Photo by Glenn Carstens-Peters on Unsplash

Kubernetes Architecture for Backend Developers

A getting started guide.

Audience

This blog is intended for full-stack and back-end developers who have some experience building back-end applications and using container technologies such as Docker. A basic understanding of networking and Linux administration is desirable. Some experience working with a cloud provider is helpful but not required. Many network-level details have been omitted to give an application-level overview of the Kubernetes architecture.

Components

Kubernetes abstracts applications, networking and other infrastructure components as objects in a programmable context. For example, an application runs in a container, the container runs in a Pod, and the Pod runs on a Node, which may be a VM, a container, or an on-premises server. Treating infrastructure components as programmable, user-modifiable objects is part of Infrastructure as Code (IaC): the practice of managing and provisioning computing infrastructure through machine-readable definition files rather than physical hardware configuration or interactive configuration tools.

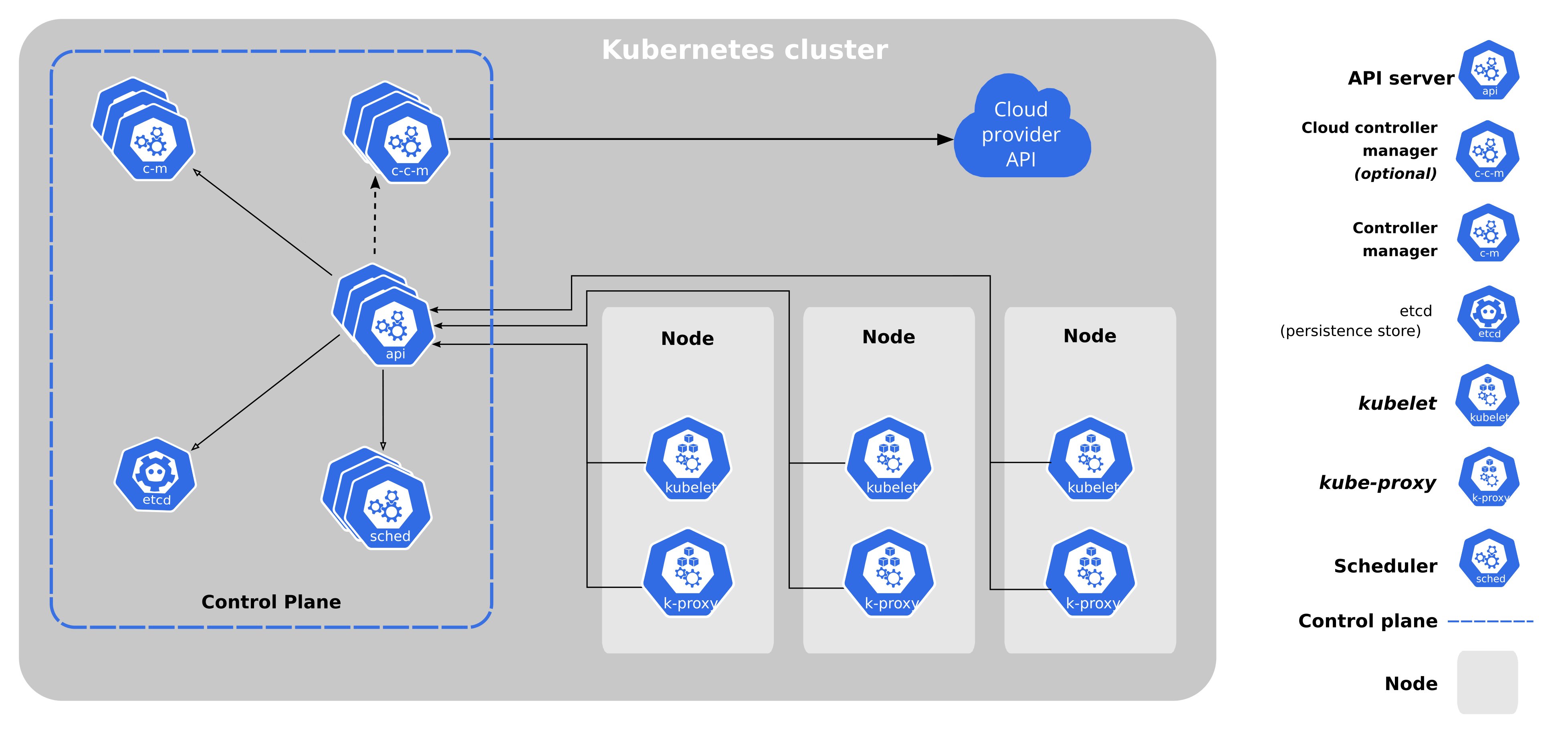

At a very high level, Kubernetes is a cluster of computing systems categorized by their distinct roles:

One or more control plane nodes.

One or more worker nodes.

Control Plane

The control plane node provides a running environment for the control plane agents responsible for managing the state of a Kubernetes cluster, and it is the brain behind all operations inside the cluster. It uses etcd, a highly available key-value data store, to persist the state of the cluster and its components.

What is etcd?

etcd is a highly available, distributed, reliable and replicated key-value datastore that can be used to store configuration and state data across nodes in a distributed system. It supports TLS (Transport Layer Security) to secure its traffic and lets users configure their own certificates. It is easy for developers to work with, as its API can also be reached over HTTP with JSON payloads.

Control Plane Components

A control plane node runs the following essential control plane components and agents:

API Server

Scheduler

Controller Managers

Key-Value Data Store

In addition, the control plane node runs:

Container Runtime

Node Agent

Proxy

Optional add-ons for cluster-level monitoring and logging.

API Server: kube-apiserver

The API Server (kube-apiserver) is the main component at the heart of the control plane node. It exposes RESTful API endpoints through which users, developers, administrators and applications designed to work with Kubernetes access the underlying cluster.

While requests are processed, the current state of the cluster is stored in etcd. The API Server is the only control plane component that talks to the etcd store, both to read and to save Kubernetes cluster state information. It acts as the intermediary for any other control plane agent inquiring about the cluster's state.
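To make the "single gateway" idea concrete, here is a minimal sketch in Python. It is not the real Kubernetes API: `KeyValueStore`, `ApiServer`, their methods and the `/registry/...` key layout are all assumptions made up for illustration. The point is only that every read and write goes through one object that owns the store.

```python
import json


class KeyValueStore:
    """Stand-in for etcd (hypothetical): maps string keys to serialized values."""

    def __init__(self):
        self._data = {}
        self.revision = 0  # bumped on every write

    def put(self, key, value):
        self.revision += 1
        self._data[key] = value
        return self.revision

    def get(self, key):
        return self._data.get(key)

    def keys_with_prefix(self, prefix):
        return sorted(k for k in self._data if k.startswith(prefix))


class ApiServer:
    """Hypothetical gateway: the only object that holds a reference to the store."""

    def __init__(self, store):
        self._store = store

    @staticmethod
    def _key(kind, name):
        # Illustrative key layout, assumed for this sketch.
        return f"/registry/{kind}/{name}"

    def create(self, kind, name, spec):
        if not name:
            raise ValueError("object name must not be empty")
        key = self._key(kind, name)
        if self._store.get(key) is not None:
            raise KeyError(f"{kind}/{name} already exists")
        self._store.put(key, json.dumps({"kind": kind, "name": name, "spec": spec}))
        return key

    def read(self, kind, name):
        raw = self._store.get(self._key(kind, name))
        return None if raw is None else json.loads(raw)

    def list_kind(self, kind):
        prefix = f"/registry/{kind}/"
        return [json.loads(self._store.get(k)) for k in self._store.keys_with_prefix(prefix)]


api = ApiServer(KeyValueStore())
api.create("pods", "web", {"image": "nginx"})
print(api.read("pods", "web"))
```

A scheduler or controller in this toy model would be handed `api`, never the store itself, which mirrors how the other control plane agents learn about cluster state.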

Scheduler Unit: kube-scheduler

The role of the kube-scheduler is to assign new workload objects, such as Pods encapsulating containers, to nodes - typically worker nodes. To decide, it uses information about the cluster components, the desired state (the state of the cluster as requested by the user) and the current state (the state of the cluster right now) of all worker nodes. It runs a scheduling algorithm that accounts for the workload's requirements, such as QoS (Quality of Service), data locality, affinity, and taints and tolerations. It filters out the nodes that cannot run the workload and ranks the remaining ones to select the node where the new workload should start. The outcome of the decision process is communicated back to the API Server, which then delegates the workload deployment to other control plane agents.

Good scheduling helps application updates roll out across nodes with little or no downtime.

Scheduling is simple in a single-node cluster, where there is only one possible placement, but it becomes more involved in a multi-node cluster.
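The filter-then-rank idea can be sketched in a few lines of Python. This is a toy model, not the kube-scheduler's actual algorithm: the `Node` and `PodRequest` classes, their fields, and the "most free capacity left" scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float      # CPU cores still unallocated
    free_memory: int     # MiB still unallocated
    labels: dict = field(default_factory=dict)
    taints: set = field(default_factory=set)


@dataclass
class PodRequest:
    name: str
    cpu: float
    memory: int
    node_selector: dict = field(default_factory=dict)
    tolerations: set = field(default_factory=set)


def feasible(node, pod):
    """Filtering step: can this node run the pod at all?"""
    has_resources = node.free_cpu >= pod.cpu and node.free_memory >= pod.memory
    labels_match = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    taints_tolerated = node.taints <= pod.tolerations  # every taint must be tolerated
    return has_resources and labels_match and taints_tolerated


def fraction_left(free, requested):
    """Share of a resource that stays free after placing the pod."""
    return (free - requested) / free if free else 0.0


def score(node, pod):
    """Scoring step (assumed rule): prefer the node left with the most headroom."""
    return (fraction_left(node.free_cpu, pod.cpu)
            + fraction_left(node.free_memory, pod.memory)) / 2


def schedule(pod, nodes):
    """Return the name of the best feasible node, or None if there is none."""
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", free_cpu=2.0, free_memory=4096),
    Node("node-b", free_cpu=8.0, free_memory=16384, taints={"gpu"}),
    Node("node-c", free_cpu=4.0, free_memory=8192, labels={"disk": "ssd"}),
]
print(schedule(PodRequest("web", cpu=1.0, memory=1024), nodes))
```

With a single node the candidate list has at most one entry, which is why scheduling is trivial there. With several nodes, the filtering and ranking rules start to matter.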

Controller Managers

The controller managers are components of the control plane node running controllers or operational processes to regulate the state of the Kubernetes cluster. Controllers are watch-loop processes continuously running and comparing the cluster's desired state (provided by objects' configuration data) with its current state (obtained from etcd via the API Server). In case of a mismatch, corrective action is taken in the cluster until its current state matches the desired state.
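A watch-loop of this kind can be sketched as a function that compares desired and current state and returns corrective actions. The code below is a simplified illustration, not Kubernetes source: the function names, the `web-N` naming scheme and the representation of state as a set of Pod names are all assumptions.

```python
def reconcile(desired_replicas, running_pods, name_prefix="web"):
    """One pass of a control loop: return the actions that close the gap."""
    actions = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create new ones under names that are not in use yet.
        taken = set(running_pods)
        index = 0
        while diff > 0:
            candidate = f"{name_prefix}-{index}"
            if candidate not in taken:
                actions.append(("create", candidate))
                taken.add(candidate)
                diff -= 1
            index += 1
    elif diff < 0:
        # Too many pods: delete the surplus, highest names first in sort order.
        for pod in sorted(running_pods)[diff:]:
            actions.append(("delete", pod))
    return actions


def apply_actions(running_pods, actions):
    """Pretend to carry out the actions and return the new current state."""
    pods = set(running_pods)
    for verb, pod in actions:
        if verb == "create":
            pods.add(pod)
        else:
            pods.discard(pod)
    return pods


def run_until_converged(desired_replicas, running_pods):
    """Keep reconciling until a pass produces no more corrective actions."""
    pods = set(running_pods)
    while True:
        actions = reconcile(desired_replicas, pods)
        if not actions:
            return pods
        pods = apply_actions(pods, actions)


# A node went down and took two of three pods with it.
print(sorted(run_until_converged(3, {"web-0"})))
```

The real controllers never stop looping. This sketch returns once the states match only so that it terminates as an example.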

kube-controller-manager

It continuously runs the controllers that act when nodes go down, ensure that Pod counts match the desired number, and create API endpoints, service accounts, and so on.

cloud-controller-manager

It continuously runs the controllers that interact with the infrastructure of the underlying cloud provider to help manage nodes, storage volumes, load balancing, and so on.

Key-Value Data Store: More on etcd

In Development and Learning Environments

etcd is configured to save the cluster state on each change. New data is appended rather than written over existing data, and obsolete data is compacted periodically. On top of this, the CLI tool etcdctl provides snapshot save and restore capabilities, which can be used to back up the cluster state and roll back to it without much hassle.

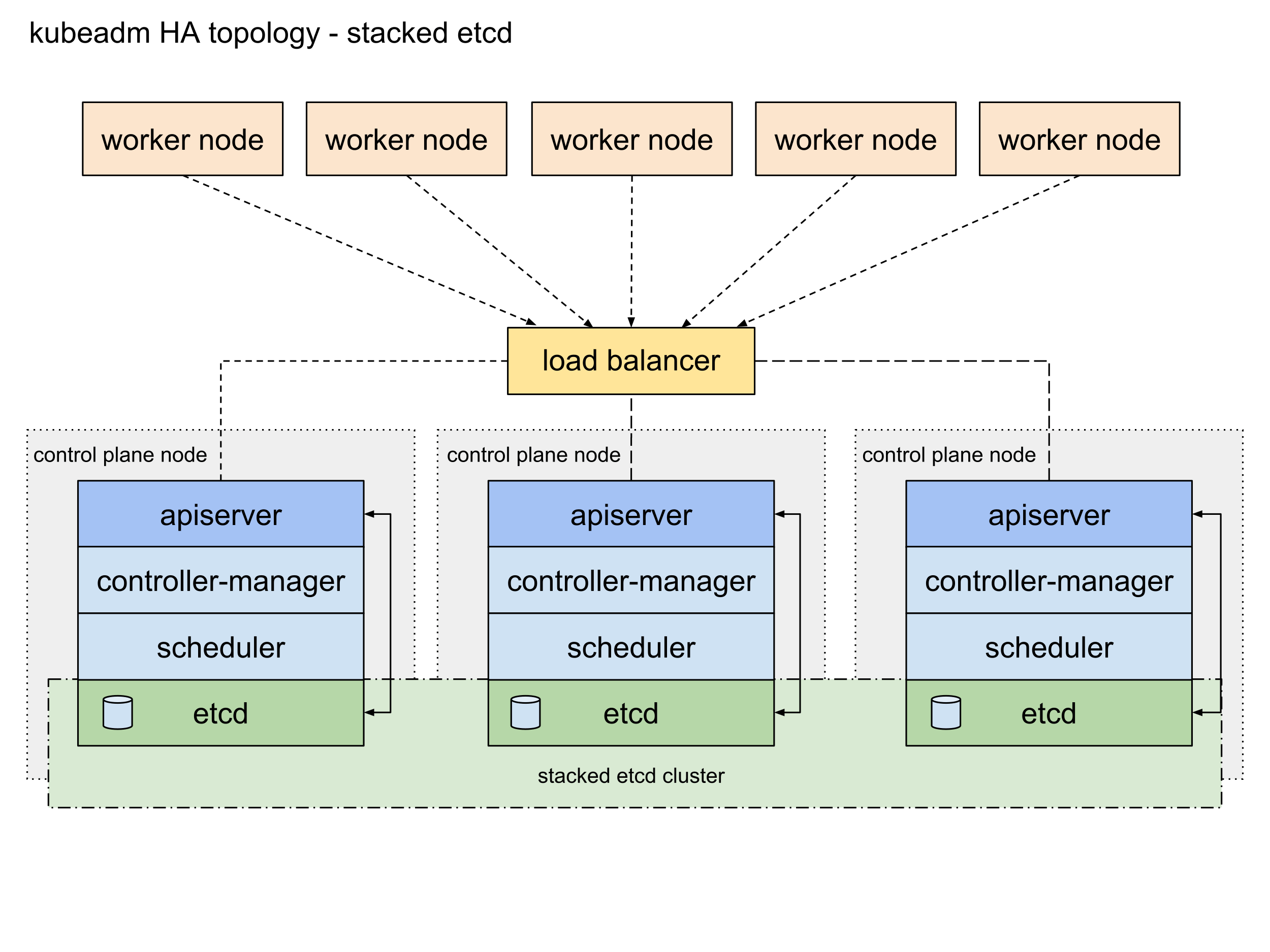

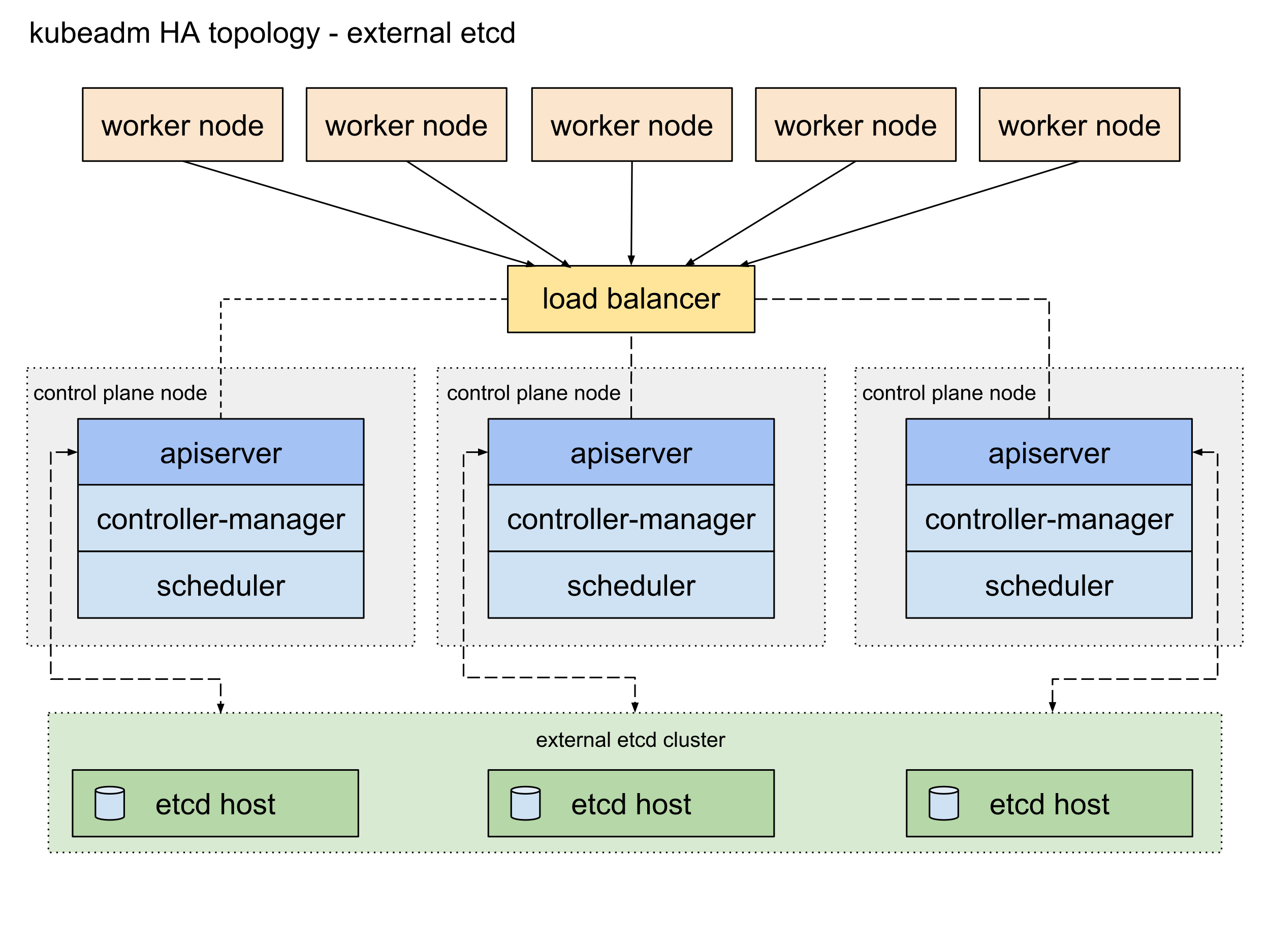
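The append-only idea behind snapshots can be illustrated with a toy store in Python. This is only a sketch of the concept and says nothing about how etcd is implemented internally. The `RevisionedStore` class and its methods are invented for this example and are unrelated to the etcdctl command line.

```python
import copy


class RevisionedStore:
    """Toy append-only key-value store: every change is a new log entry."""

    def __init__(self):
        # Each entry is (revision, key, value); a value of None marks a deletion.
        self._log = []

    def put(self, key, value):
        self._log.append((len(self._log) + 1, key, value))
        return len(self._log)  # the revision just written

    def delete(self, key):
        return self.put(key, None)

    def state(self, at_revision=None):
        """Replay the log up to a revision to rebuild the key space."""
        limit = len(self._log) if at_revision is None else at_revision
        view = {}
        for revision, key, value in self._log:
            if revision > limit:
                break
            if value is None:
                view.pop(key, None)
            else:
                view[key] = value
        return view

    def snapshot(self):
        """Return an independent copy of the log, suitable for a backup."""
        return copy.deepcopy(self._log)

    @classmethod
    def restore(cls, snapshot):
        """Build a fresh store from a previously saved snapshot."""
        store = cls()
        store._log = copy.deepcopy(snapshot)
        return store


store = RevisionedStore()
store.put("deployments/web/replicas", 2)
backup = store.snapshot()
store.put("deployments/web/replicas", 5)   # a change we later regret
print(RevisionedStore.restore(backup).state())
```

Because nothing is overwritten, any earlier revision can be rebuilt by replaying the log, which is what makes saving and restoring a snapshot straightforward in this model.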

In Production and Staging Environments

These environments generally run multiple control plane nodes. Because data resiliency is important in production and staging environments, the data store is replicated in HA (High Availability) mode. There are two possible configurations:

Stacked etcd Topology

External etcd Topology

Worker Nodes

A worker node is a node in the cluster where client applications run. The application containers run inside Pods. A Pod is the smallest schedulable unit in Kubernetes, and it provides encapsulation for containerised applications. A Pod may run one or more containers. Unless there is a specific use case, it is recommended to run one container per Pod.

Networking in Worker Nodes

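In a single-node cluster, the same node acts as both control plane and worker, so all the traffic between the user and the application passes through that node.

In a cluster with multiple worker nodes, the traffic between users and applications is handled by the worker nodes themselves and is not routed through the control plane node.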

Components of Worker Nodes

Container Runtime

Node Agent - kubelet

Proxy - kube-proxy

Addons for further services such as DNS and the Dashboard UI.

Container Runtimes

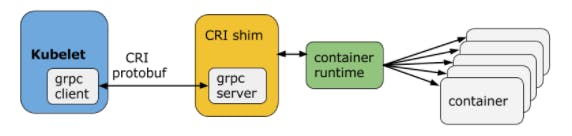

Although Kubernetes is described as a "container orchestration engine", it cannot directly handle and run containers. To manage a container's lifecycle, Kubernetes requires a container runtime on the node where a Pod and its containers are to be scheduled. Runtimes are required on all nodes of a Kubernetes cluster, both control plane and worker. Kubernetes supports several container runtimes, such as containerd and CRI-O, through the Container Runtime Interface (CRI).

Node Agent: kubelet

The kubelet is an agent that runs on each node (including control plane nodes) and sits between the container runtime and the API Server. It receives Pod definitions from the API Server and has the container runtime run the corresponding containers. It also monitors the health and resources of the Pods and periodically reports them to the control plane.

The kubelet connects to container runtimes through a plugin-based interface - the Container Runtime Interface (CRI). The CRI consists of protocol buffers, gRPC API, libraries, and additional specifications and tools. To connect to interchangeable container runtimes, kubelet uses a CRI shim, an application which provides a clear abstraction layer between kubelet and the container runtime.
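The shim is essentially an adapter. The sketch below shows the pattern in Python with entirely made-up names: `RuntimeService` is a heavily reduced stand-in for the CRI (the real one is a gRPC API defined with protocol buffers), and `FakeContainerd` is a hypothetical runtime, not the containerd client.

```python
import itertools
from abc import ABC, abstractmethod


class RuntimeService(ABC):
    """Hypothetical, heavily reduced stand-in for the interface the kubelet calls."""

    @abstractmethod
    def start_container(self, pod_name, image):
        """Start a container and return its ID."""

    @abstractmethod
    def stop_container(self, container_id):
        """Stop a running container."""


class FakeContainerd:
    """Made-up runtime with its own vocabulary (tasks instead of containers)."""

    def __init__(self):
        self.tasks = {}
        self._ids = itertools.count(1)

    def new_task(self, image_ref, labels):
        task_id = f"task-{next(self._ids)}"
        self.tasks[task_id] = {"image": image_ref, "labels": labels}
        return task_id

    def kill_task(self, task_id):
        self.tasks.pop(task_id, None)


class FakeContainerdShim(RuntimeService):
    """The shim: translates the common interface into runtime-specific calls."""

    def __init__(self, runtime):
        self._runtime = runtime

    def start_container(self, pod_name, image):
        return self._runtime.new_task(image, {"pod": pod_name})

    def stop_container(self, container_id):
        self._runtime.kill_task(container_id)


class Kubelet:
    """Only knows RuntimeService, so the runtime behind it is interchangeable."""

    def __init__(self, runtime_service):
        self._runtime = runtime_service
        self.containers = {}  # pod name -> list of container IDs

    def sync_pod(self, pod_name, images):
        ids = [self._runtime.start_container(pod_name, image) for image in images]
        self.containers[pod_name] = ids
        return ids

    def remove_pod(self, pod_name):
        for container_id in self.containers.pop(pod_name, []):
            self._runtime.stop_container(container_id)


kubelet = Kubelet(FakeContainerdShim(FakeContainerd()))
print(kubelet.sync_pod("web", ["nginx", "log-agent"]))
```

Supporting another runtime in this model means writing another `RuntimeService` subclass. The `Kubelet` class does not change.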

What is a Shim?

Shims are Container Runtime Interface (CRI) implementations, interfaces or adapters, specific to each container runtime supported by Kubernetes. Some examples of CRI shims:

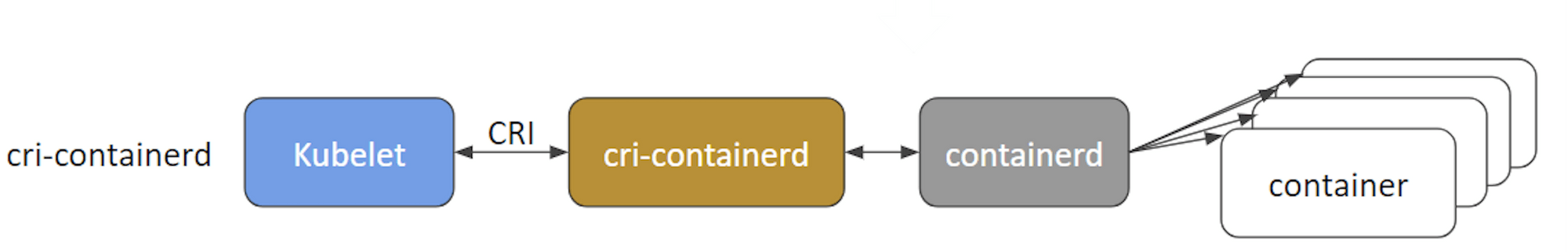

cri-containerd

cri-containerd allows containers to be directly created and managed with containerd at kubelet's request:

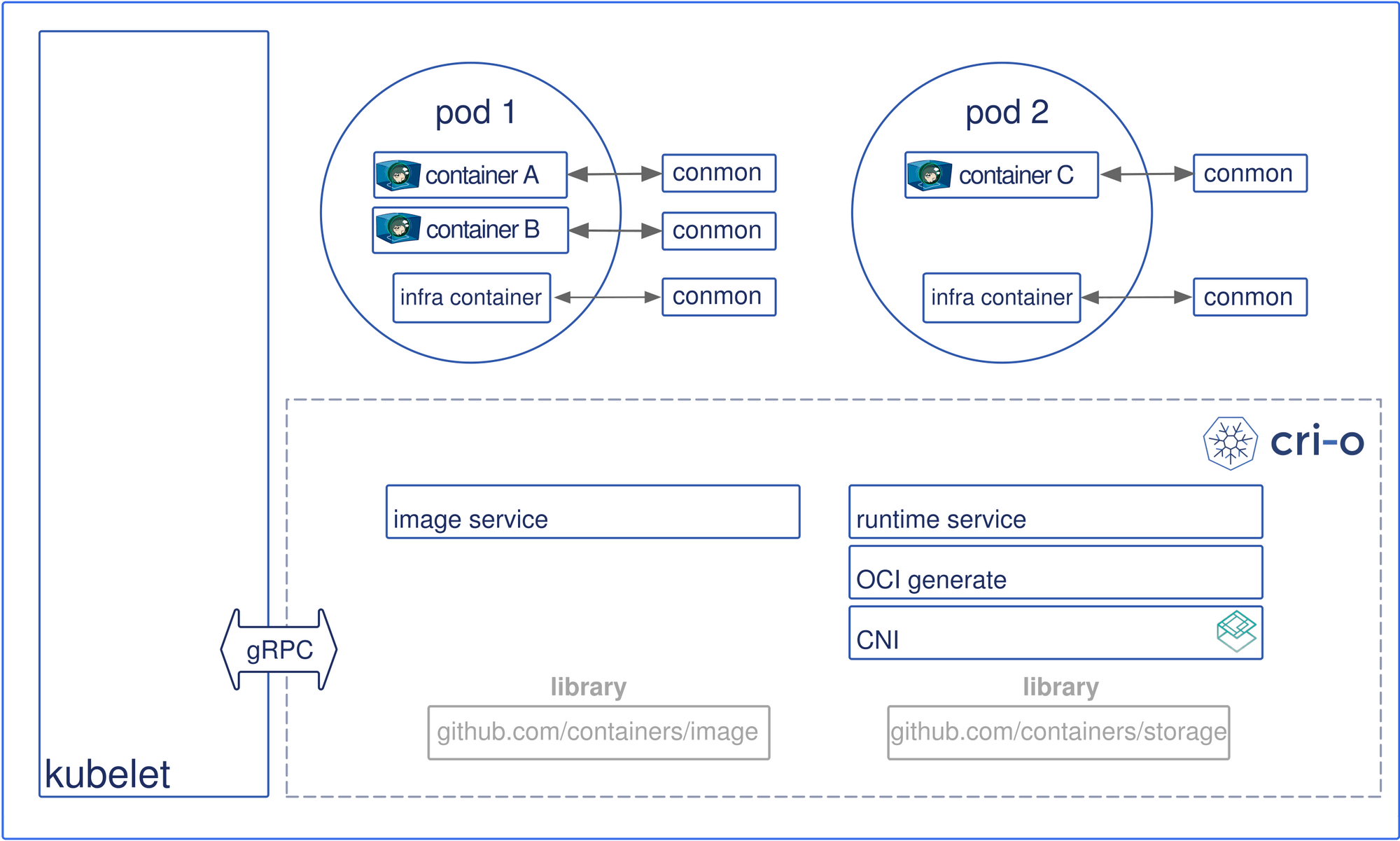

CRI-O

CRI-O enables the use of any Open Container Initiative (OCI) compatible runtime with Kubernetes, such as runC.

Addons

Addons are cluster features and functionality that are not built into Kubernetes itself and are therefore implemented through third-party Pods and Services.

DNS

- Cluster DNS is a DNS server required to assign DNS records to Kubernetes objects and resources.

Dashboard

- A general-purpose web-based user interface for cluster management.

Monitoring

- Collects cluster-level container metrics and saves them to a central data store.

Logging

- Collects cluster-level container logs and saves them to a central log store for analysis.

Networking in Kubernetes

Kubernetes components interact with each other via Linux network namespaces and SDN (Software Defined Networking) tooling built around the CNI (Container Network Interface). Namespaces are a virtualisation feature of the Linux kernel used to isolate system resources across processes. Many in-depth details have been omitted and will be covered in my future blogs on Kubernetes networking.

Communication between Container-to-Container

This is achieved via Linux network namespaces. When a Pod is created, a special Pause container is started for the sole purpose of creating the Pod's network namespace. All containers inside the Pod share this network namespace and communicate with one another through it, via localhost.
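From the application's point of view, sharing a network namespace means a neighbouring container is reachable on localhost. The Python sketch below imitates that with two threads in one process standing in for two containers in one Pod. It does not create any namespaces, and the function names are made up for this example.

```python
import socket
import threading


def serve_once(server_sock):
    """'Container A': accept one connection and reply with the payload upper-cased."""
    conn, _ = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data.upper())


def call_neighbour(message):
    """'Container B': send a message to the neighbour over the loopback interface."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        # Port 0 lets the operating system pick any free port.
        server_sock.bind(("127.0.0.1", 0))
        server_sock.listen(1)
        server_sock.settimeout(5)
        port = server_sock.getsockname()[1]

        worker = threading.Thread(target=serve_once, args=(server_sock,))
        worker.start()

        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message.encode())
            reply = client.recv(1024).decode()
        worker.join()
    return reply


print(call_neighbour("ping"))
```

One practical consequence of the shared namespace is that two containers in the same Pod cannot both listen on the same port.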

Communication between Node-to-Node

In the Kubernetes networking model, Pods are scheduled on nodes in an unpredictable fashion: they are continuously created and destroyed as the cluster moves towards its desired state. Even so, Pods are expected to communicate with each other across nodes, and to do so without any NAT (Network Address Translation) setup.

Kubernetes solves this problem by simplifying its networking model and treating Pods like VMs on a network. Each Pod receives a unique IP address, which Pods use to communicate with each other just as VMs on the same network would.
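One common way to guarantee unique Pod IPs is to give each node its own slice of a cluster-wide address range and hand out addresses from that slice. The sketch below models this with Python's standard `ipaddress` module. The `ClusterIPAM` class, the `10.244.0.0/16` range and the `/24` per-node size are assumptions for illustration, and real clusters delegate this job to their network plugin.

```python
import ipaddress


class ClusterIPAM:
    """Toy IP address manager: one subnet per node, one address per Pod."""

    def __init__(self, cluster_cidr="10.244.0.0/16", node_prefix=24):
        # Lazily yields 10.244.0.0/24, 10.244.1.0/24, ... for the default range.
        self._subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
        self._free_addresses = {}  # node name -> iterator over unused host addresses
        self.pod_ips = {}          # pod name -> assigned address

    def add_node(self, node):
        subnet = next(self._subnets)
        self._free_addresses[node] = iter(subnet.hosts())
        return subnet

    def add_pod(self, pod, node):
        address = next(self._free_addresses[node])
        self.pod_ips[pod] = address
        return address


ipam = ClusterIPAM()
ipam.add_node("node-1")
ipam.add_node("node-2")
print(ipam.add_pod("web-0", "node-1"))
print(ipam.add_pod("web-1", "node-2"))
```

Because the per-node subnets never overlap, two Pods can never receive the same address, and a Pod's IP also reveals which node's range it came from. That makes routing between nodes possible without NAT.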

How do containers communicate with traffic outside the Pod?

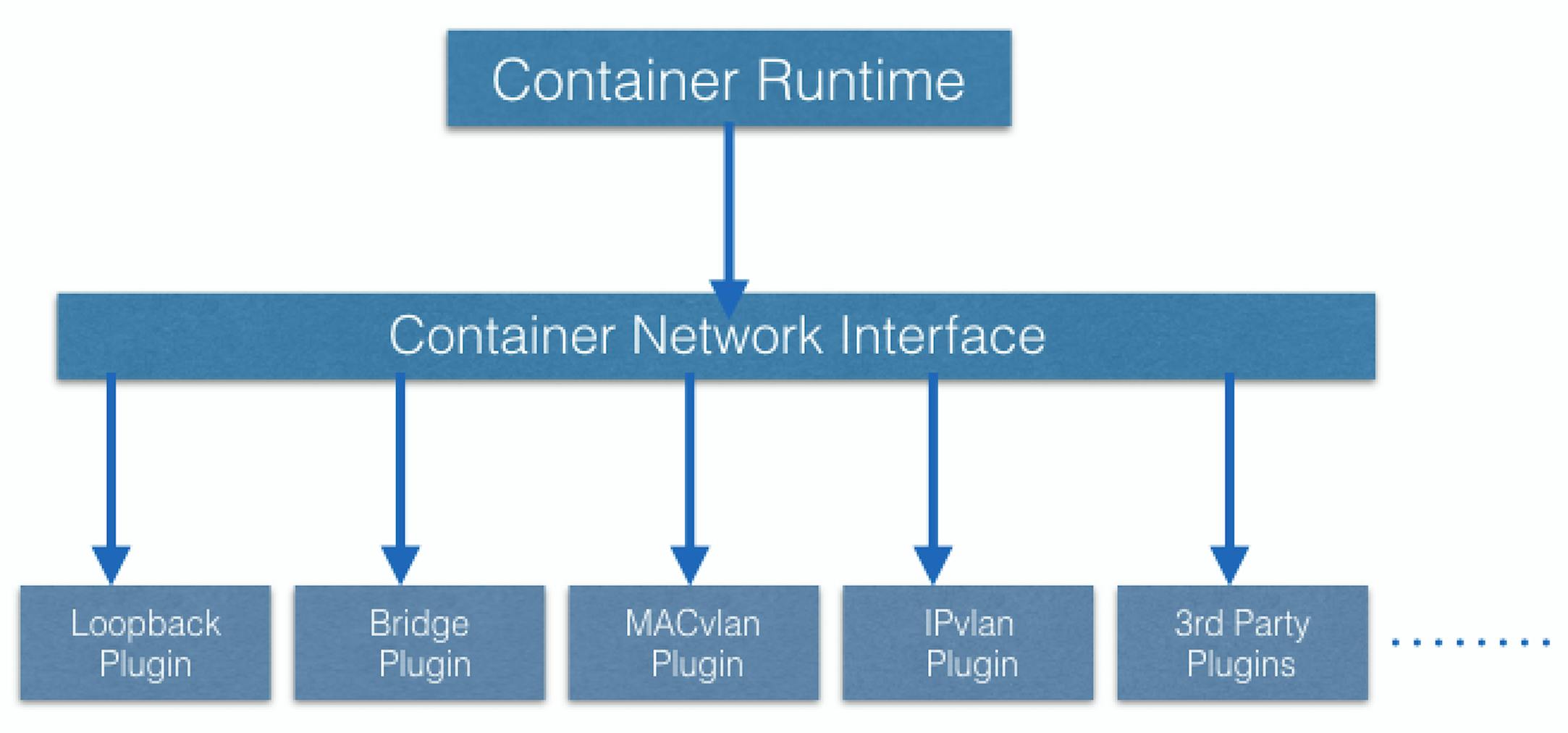

This is achieved using CNI (Container Network Interface) plugins, which integrate containers with the Kubernetes networking model. CNI is a set of specifications and libraries which allow plugins to configure the networking for containers. While there are a few core plugins, most CNI plugins are third-party Software Defined Networking (SDN) solutions implementing the Kubernetes networking model. In addition to addressing the fundamental requirement of the networking model, some networking solutions offer support for Network Policies. Flannel, Weave and Calico are only a few of the SDN solutions available for Kubernetes clusters.

Communication between Pod-to-External-World

This is achieved via Services, whose IP address remains constant even as the Pods behind them are created and destroyed. The complex network routing is handled by kube-proxy on each node. By exposing Services to the external world with the aid of kube-proxy, applications become accessible from outside the cluster over a virtual IP address and a dedicated port number.
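The value of a stable virtual IP is easiest to see in a small model. The Python sketch below is not how kube-proxy is implemented: the `ServiceProxy` class, its methods, the sample addresses and the round-robin choice of backend are all assumptions made so the example is deterministic.

```python
import itertools


class ServiceProxy:
    """Toy model: maps a stable (virtual IP, port) pair to changing Pod addresses."""

    def __init__(self):
        self._backends = {}  # (virtual_ip, port) -> list of "pod_ip:port" strings
        self._cursors = {}   # (virtual_ip, port) -> round-robin iterator

    def set_endpoints(self, virtual_ip, port, pod_addresses):
        """Called whenever the set of Pods behind the Service changes."""
        key = (virtual_ip, port)
        self._backends[key] = list(pod_addresses)
        self._cursors[key] = itertools.cycle(self._backends[key])

    def route(self, virtual_ip, port):
        """Pick the Pod address that should receive the next connection."""
        key = (virtual_ip, port)
        if not self._backends.get(key):
            raise LookupError(f"no endpoints behind {virtual_ip}:{port}")
        return next(self._cursors[key])


proxy = ServiceProxy()
proxy.set_endpoints("10.96.0.10", 80, ["10.244.0.5:8080", "10.244.1.7:8080"])
print(proxy.route("10.96.0.10", 80))

# The Pods are replaced, but clients keep using the same virtual IP and port.
proxy.set_endpoints("10.96.0.10", 80, ["10.244.2.3:8080"])
print(proxy.route("10.96.0.10", 80))
```

Clients only ever see `10.96.0.10:80` in this model. Which Pod answers is an internal detail that can change at any time.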